What is A/B testing and why you should do it

Erik Župančić

9 min read

Working in a digital product agency means that you’re surrounded by a bunch of different people with different ideas on how to improve our clients’ products and help them achieve their business goals. But how can we properly measure the effect of those changes on the products?

In this blog post we’ll try to explain what A/B testing is and how it’s used to optimise digital products and help clients achieve their business goals.

How you should not test things

Before we start talking about A/B testing, let’s take a look at an example of a bad approach to measuring. For this, we will use our own website as an example. Imagine we want to increase the number of business inquiries we get from the contact form on our website. The first thing we want to do is test how the colour of the call-to-action (CTA) button affects the click-through rate (CTR), which is the share of visitors who click the button.

Since it’s quite an easy thing to change, we decide to just do it, and then let it simmer for two weeks until we get enough data to draw a conclusion. After two weeks we take a look at the data and see that the CTR has increased from 0.5% to 1%. Upon seeing the very positive results, management is opening champagne, the social media team is publishing success stories and the product team is patting themselves on the back.

But can we actually draw any conclusions from this type of experiment? The answer is: not really.

Looking at the data, we can clearly see that the CTR increased during the testing period. But the issue with doing experiments this way is that we’re ignoring other factors or conditions that could affect the measurements. Let’s say that these things also happened after the release:

- The CEO of the company had a great interview on a popular podcast

- There was a successful digital marketing campaign

- We’ve reduced our rates by 20%

- There was a natural increase in inquiries due to seasonality

Doing the experiment this way makes it impossible to isolate the effect of the button colour change, since the observed increase in CTR could be due to any of the factors mentioned above. For all we know, if the button had stayed blue, the CTR might have increased even more.

Fortunately, there’s a better way to do the same experiment, and it’s called A/B testing.

What is A/B testing?

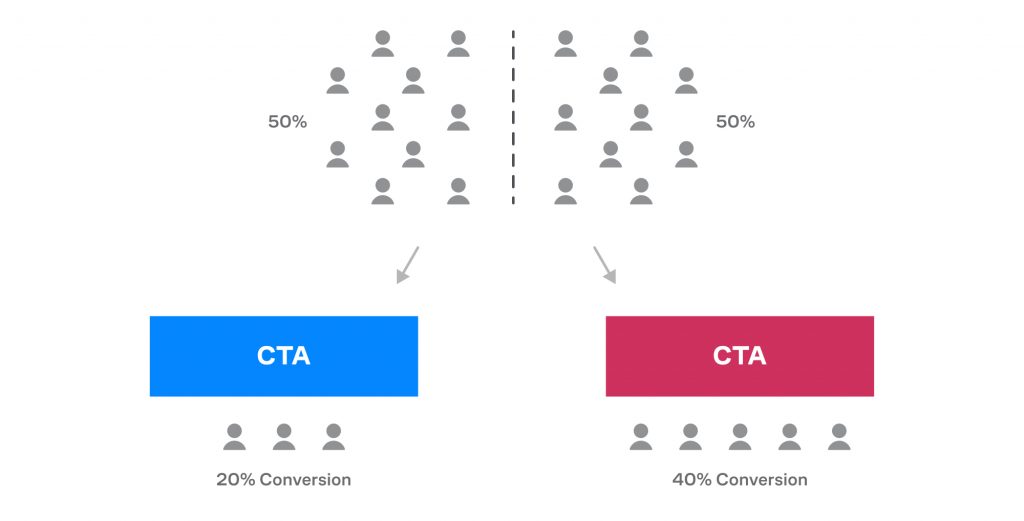

An A/B test is an experiment in which we directly compare two versions of a digital product against each other to see which one performs better.

In the most common type of A/B test we will do this by randomly showing two different versions of the digital product to different users.

Some users are assigned to the control group and see the current version of the product. Other users are assigned to the treatment group and see a version that typically includes some kind of change.

For the example we had in the previous section, this means that we would show the version with a blue button to 50% of users, and the version with a red button to the other 50%.

Since we’re running both versions at the same time and under the same conditions, outside factors such as a marketing campaign or seasonality affect both groups equally. Any difference between the two groups is therefore either because of the change we’ve made, or just because of pure dumb luck. In other words, when testing this way we don’t need to account for those other factors separately.
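To make the 50/50 split more concrete, here is a minimal sketch of one possible way to assign users to groups. It is an assumption on our part rather than a description of any particular tool: instead of flipping a coin on every visit, it hashes a user identifier so that a returning user always sees the same version. The function name `assign_variant`, the experiment name and the user IDs are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str,
                   experiment: str = "cta-button-colour",
                   treatment_share: float = 0.5) -> str:
    """Deterministically assign a user to 'control' or 'treatment'.

    Hypothetical helper: hashing the experiment name together with the
    user ID gives a stable, roughly uniform split across users.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "treatment" if bucket < treatment_share else "control"


# Count how a batch of made-up user IDs is split between the groups.
counts = {"control": 0, "treatment": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}")] += 1
print(counts)
```

Including the experiment name in the hash means that the same user can land in different groups in different experiments, which keeps separate tests from always sharing the same audience split.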

What are the benefits of using A/B testing?

To put it simply, it’s one of the most efficient ways to optimize your conversion rates and squeeze out as much as possible from your existing traffic.

- It’s a way to cull failures and learn what works

Instead of relying on opinions and unclear results, we’re measuring the effects of changes to the product directly. This gives us much more confidence that we’re culling failures and implementing successes.

But in the case of A/B testing, even failures are valuable. Learning what doesn’t work shows us the direction in which we should move in further cycles of testing.

- It’s cheaper than the alternatives

If you think about it, there’s no company in the world with a 100% conversion rate. This means that it’s possible to increase the number of conversions without acquiring new users, which is usually quite expensive.

Compared to spending a bunch of money on marketing, tweaking and optimizing your website for conversions is probably much cheaper.

- It’s very low risk and there’s no need to make tough decisions

Remember the “wrong” example we had at the beginning? Imagine how many discussions and meetings those people had to sit through before deciding to implement a feature, just to make sure it wouldn’t ruin the business.

Well, with A/B testing you don’t have to worry about any of that. Just test out an idea on a small percentage of traffic and then afterwards decide if you want to implement it or not based on the results.

When and why should you not A/B test?

Although A/B testing is one of the most recommended methods for website optimization, it requires a relatively high volume of traffic to produce reliable results. Websites with lower traffic should probably use some other method, like user surveys, to identify problem areas.
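To get a feeling for what “a relatively high volume of traffic” means, here is a rough sketch that estimates how many visitors each group needs. It uses the standard normal-approximation formula for comparing two proportions. The 5% significance level and 80% power are common conventions we are assuming here, and the daily traffic figure is made up for illustration.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline_rate: float, target_rate: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per group for a two-sided test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + target_rate * (1 - target_rate))
    effect = target_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_needed(visitors_per_group: int, daily_visitors: int) -> int:
    """Days to fill both groups, assuming a 50/50 split of all traffic."""
    return ceil(2 * visitors_per_group / daily_visitors)


# The CTR example from earlier: detecting a lift from 0.5% to 1%.
n = sample_size_per_group(0.005, 0.01)
print(n, "visitors per group")
# Hypothetical traffic of 300 visitors per day.
print(days_needed(n, 300), "days")
```

Under these assumptions, detecting a jump from 0.5% to 1% takes several thousand visitors per group, and smaller improvements need far more. That is why low-traffic websites can end up waiting months for a conclusive result.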

How do we conduct A/B tests?

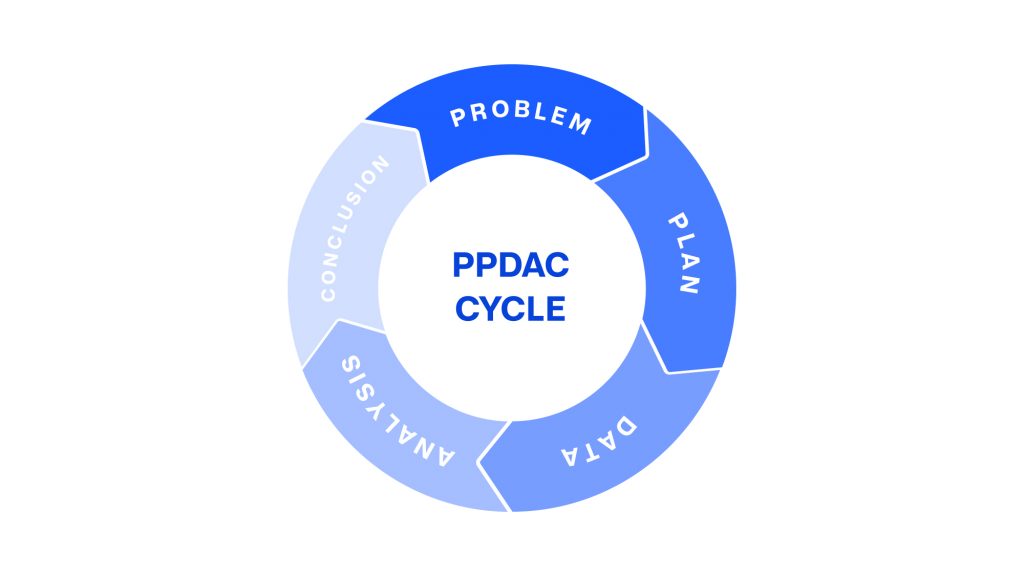

The easiest way to explain the A/B testing process is using the PPDAC (Problem, Plan, Data, Analysis, Conclusion) problem-solving cycle.

The cycle consists of five phases:

- Problem

In this phase we’re conducting thorough research on how a website is performing at the moment, using quantitative tools (such as Google Analytics), qualitative tools (such as Hotjar), or both. By learning more about the website and how users interact with it, we’re able to identify its problem areas.

- Plan

This is the phase in which we’re trying to figure out how to solve problem areas. It’s often organized as a brainstorming workshop that involves people from different backgrounds. The output of this phase should be a clear set of hypotheses we want to test and a plan on how to conduct the A/B test.

- Data

In this phase we’re running the experiment and collecting data. The duration of this phase depends on the type of website, the volume of traffic, as well as the minimum improvement we want to detect.

- Analysis

Once we’ve collected enough data, it’s time to analyze it. In this phase we’re applying statistical methods to determine whether the difference between the two versions is large enough to declare a winner, or whether it could simply be down to chance. Even if the test remains inconclusive, we can still draw valuable insight from it and use it for subsequent iterations.

- Conclusion

In the final step we’re communicating the results of the A/B test to the stakeholders. Those results are the basis for deciding which version should be implemented. No matter what decision has been made, the insights derived from the experiment will be used in the next cycle.
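As an illustration of the Analysis phase described above, here is a minimal sketch of one common statistical method, the two-proportion z-test. This is just one possible choice, not necessarily the method used in any specific project, and the visitor and click counts below are invented for the example.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates.

    Group A is the control, group B is the treatment.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_error == 0:
        # No variation at all (e.g. zero conversions): nothing to detect.
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up numbers: 25 clicks out of 5,000 visitors for the blue button
# (control) and 50 clicks out of 5,000 visitors for the red one (treatment).
z, p = two_proportion_z_test(25, 5_000, 50, 5_000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests that the difference is unlikely to be pure luck. A large one means the test is inconclusive, not that the two versions are proven to be equal.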

Conclusion

A/B testing is one of the essential tools for developing and improving any user-oriented digital product.

By properly practicing A/B testing we’re using data and objective facts to determine if something is effective or not, instead of relying on subjective impressions and gut feelings.

A/B testing is not something that exists in a vacuum. It’s a small cog in a much larger process we’ve implemented to build and optimise different digital solutions.

Erik Župančić

Data Analyst. Turning data into product insights and decisions. Speaks in numbers and thinks in graphs.
